Project goals

- Implement a context tracking functionality in the question answering system Aqqu.

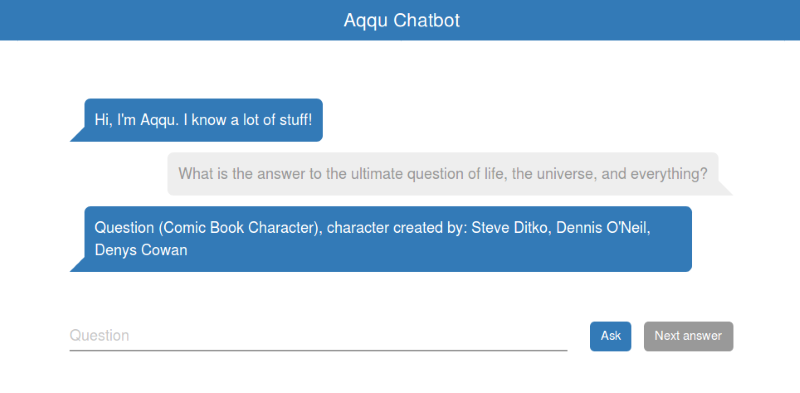

- Implement a conversational web user interface (Aqqu Chatbot).

- Develop an evaluation system for the context tracking.

- Evaluate the influence of context tracking on the overall performance of Aqqu.

Introduction

In this project, we focused on analyzing the influence of context on the question answering process, using the system Aqqu.

Aqqu processes questions independently from each other and gives isolated answers.

However, the context is very important in real life, and often one question is followed by additional or clarifying questions.

As an example, let us look at the following dialogue of a user with Aqqu, without taking context into account:

- User Who was Mozart?

- Chatbot Wolfgang Amadeus Mozart, profession: Composer, Pianist, Musician, Violinist, Violist

- User Who was he married to?

- Chatbot World Health Organization, founders: Brock Chisholm

The system did not know who he was; therefore, the system gave the best possible answer, where it interpreted Who as the abbreviation of the World Health Organization.

In the next example, we see a more human-like conversation:

- User Who was Mozart?

- Chatbot Wolfgang Amadeus Mozart, profession: Composer, Pianist, Musician, Violinist, Violist

- User Who was he married to?

- Chatbot Wolfgang Amadeus Mozart, spouse: Constanze Mozart

- User Where was she born?

- Chatbot Constanze Mozart, place of birth: Zell im Wiesental

The system understands who is meant by he and she, when the user substitutes the noun with a pronoun.

To make the question answering process more human-like, a simple context tracking functionality was integrated in Aqqu.

Aqqu

Aqqu is a question answering system. It was developed at the Chair for Algorithms and Data Structures from the Department of Computer Science, University of Freiburg by Prof. Dr. Hannah Bast and Elmar Haussmann [1]. Aqqu is available online and via Telegramm. The system is built on Freebase, but it is not adjusted to it, so it can be used with any knowledge base.

Aqqu usage

Aqqu can be used by making a request in a browser in the following form:

http://titan.informatik.privat:8090/?q=who played dory in finding nemo| http://titan.informatik.privat | specifies the backend that is used |

| :8090/ | the port |

| ?q=who played dory in finding nemo | the executed query |

The answer is displayed as JSON API. The API has the following structure:

{"candidates": [{"answers": [{"mid": string, "name": string}, … ],

"entity_matchess": [{"mid": string}, … ],

"features": {"avg_em_popularity": float,

"avg_em_surface_score": float,

"cardinality": float,

… },

"matches_answer_type": int,

"pattern": string,

"rank_score": float,

"relation_matches": [{"name": string, "token_positions": […]}, … ],

"root_node": {"mid": string},

"out_relations": [{"name": string,

"target_node": {"mid": string, "out_relations": […]}},

… ],

"sparql": string},

… ],

"parsed_query": {"content_token_positions": [int],

"identified_entities": [{"entity": {"mid": string, "name": string},

"perfect_match": boolean,

"score": int,

"surface_score": float,

"text_match": boolean,

"token_positions": [int],

"types": [string] },

…

],

"is_count": boolean,

"target_type": string,

"tokens": [{"lemma": string,

"offset": int,

"orth": string,

"tag": string},

… ]

},

"raw_query": string

}

Another way to run Aqqu is on a website with a convenient interface:

http://aqqu.informatik.uni-freiburg.deThe information on how to train, build and run the Aqqu system backend can be found under the following link:

https://ad-git.informatik.uni-freiburg.de/ad/AqquAqqu uses a docker, hence it is possible to create multiple different containers and to train the system with different parameters and datasets in each of these containers.

Context tracking

In natural language, context can often clarify the meaning of a question and simplify the search for an answer.

The Aqqu question answering process includes the following steps:

- Entity Matching

- Candidate Generation

- Relation Matching

- Features Extraction

- Candidate Pruning

- Ranking

The strategy of the system is to match as many entities as possible, score the matches and exclude the least relevant answers in the last steps. The main approach to implementing conversation following in this project is to store relevantly matched entities from the previous questions and add these to the set of identified entities of the current question, if the system finds a pronoun in the processed question. Therefore, Aqqu will also take the objects mentioned before into account. The additional entities can be seen as the necessary context for the system to figure out an answer.

The main approach for the conversation tracking consists of the following steps:

- Store the identified entities (ID and name) after the system gets a result.

- Look for pronouns in upcoming queries.

- If the processed query contains a pronoun - add the previous entities to the end of the query;

if it does not – treat the query as usual.

An example of a resulting url for a question that contains a pronoun could be:

http://titan.informatik.privat:8090/?q=where was he born&p=m.0jcx,Albert Einsteinp= indicates an additional entity, where m.0jcx is the entity ID and Albert Einstein is the entity name.

Also, more than one additional entity can be concatenated to the end of the url:

http://titan.informatik.privat:8090/?q=where was he born&p=m.0jcx,Albert Einstein&p=m.05d1y,Nikola TeslaIn this case, both Albert Einstein and Nicola Tesla are added to the identified entities.

The system stores both these entities in the query and in the results. This allows the system to also continue a conversation, if the following questions refer to some entity from the previous answers.

For example:

- User Where was Albert Einstein born?

- Chatbot Albert Einstein, place of birth: Ulm

- User Where is it?

- Chatbot (answer 1) Albert Einstein, location: Germany, Princeton, Munich, Bern

- Chatbot (answer 2) Ulm, contained by: Germany, Baden-Württemberg

The system does not make a separation between he, she, it or they. All pronouns are treated equally, therefore Aqqu interprets it as both Albert Einstein and Ulm and gives the corresponding answers.

The Aqqu backend with the conversational tracking functionality can be found under the link:

https://ad-git.informatik.uni-freiburg.de/ad296/Aqqu/tree/conversationalChatbot

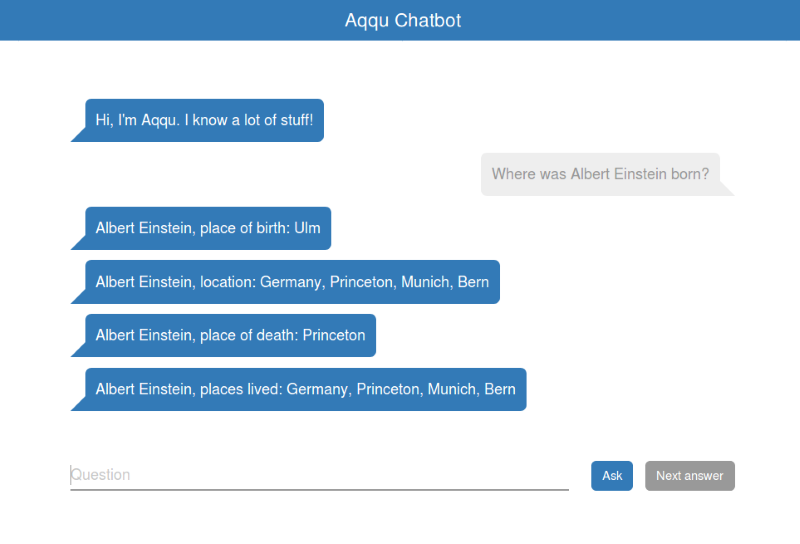

A conversational web UI in the form of a chatbot was developed within this project.

The Aqqu chatbot was built using Flask. The application takes the text from the Question field and sends a request to Aqqu’s backend, which is a docker container on a tapoa server. Aqqu gives an answer in the form of an API, which then is reshaped to a readable answer in the application. The application stores the entities in the cache, in order to process potential future questions with pronouns. The cache is always overwritten when the next question does not have pronouns. The chatbot always gives the answer with the highest rank first. To get another answer, the user can click on the Next answer button. When there are no possible candidates left, the application will report, that it has no alternative answers.

The format of the answer in the Aqqu Chatterbot is:

entity name,candidate relation match:all answers*

* all answers are presented sequentially and comma-separated

In the first answer from the above example, Albert Einstein is the entity name, place of birth is the candidate relation match and Ulm is the answer.

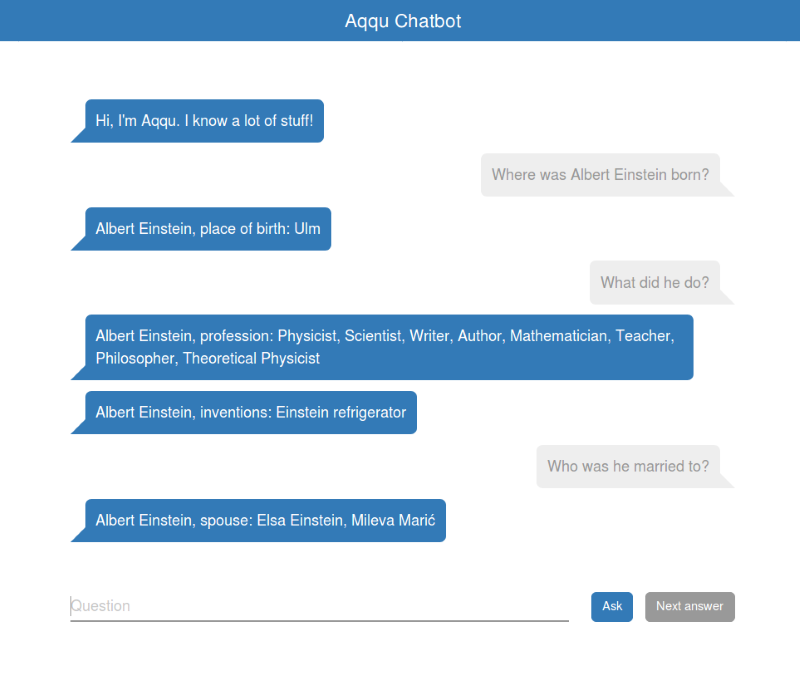

In the next picture, the context tracking is shown. The system recognizes that with he, the user means Albert Einstein and gives correct answers.

This behavior can cause some problems for questions where an entity as well as a pronoun are given.

For example:

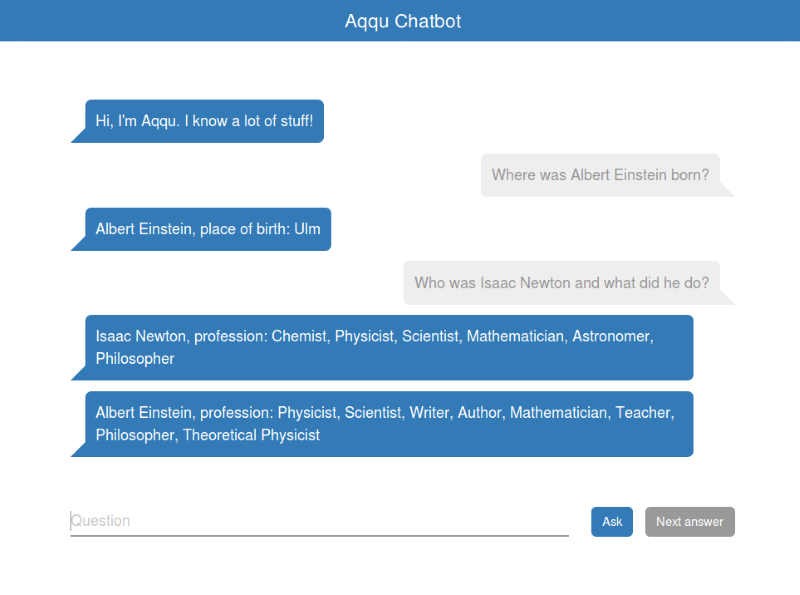

‘Who was Isaac Newton and what did he do?’

In this case the application will use both Isaac Newton and the previously stored entity.

In the following picture it is shown that the system has both identified Issac Newton and Albert Einstein. The answer referring to Isaac Newton got a higher rank – thus it is the first candidate.

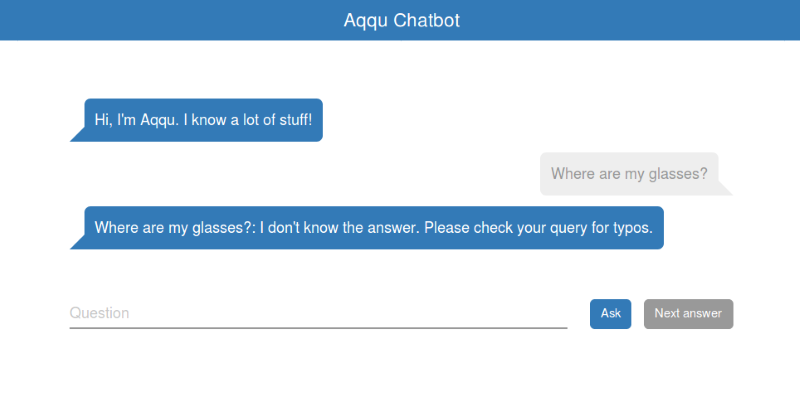

In the following picture the application has not found any suitable candidates.

Sometimes it gives wrong answers as well.

The chatbot code can be found under:

https://ad-git.informatik.uni-freiburg.de/ad296/AqquChatterbot/tree/noGenderDataset evaluation

For the evaluation of the system performance, the WebQSP dataset was used. The WebQSP dataset was split into training (70%) and testing (30%) datasets. It is the same split of the WebQSP that was used for the evaluation of the non-conversational Aqqu [1]. The original dataset consists of a list of questions.

This is an example of the dataset:

{"Version": "1.0", "FreebaseVersion": "2015-08-09", "Questions": [

{"QuestionId": "WebQTest-0", "RawQuestion": "what does jamaican people speak?", "ProcessedQuestion": "what does jamaican people speak",

"Parses": [

{

"ParseId": "WebQTest-0.P0",

"AnnotatorId": 0,

"AnnotatorComment": {

"ParseQuality": "Complete",

"QuestionQuality": "Good",

"Confidence": "Normal",

"FreeFormComment": "First-round parse verification"

},

"Sparql": "PREFIX ns: <http://rdf.freebase.com/ns/>\nSELECT DISTINCT ?x\nWHERE {\nFILTER (?x != ns:m.03_r3)\nFILTER (!isLiteral(?x) OR lang(?x) = '' OR langMatches(lang(?x), 'en'))\nns:m.03_r3 ns:location.country.languages_spoken ?x .\n}\n",

"PotentialTopicEntityMention": "jamaican",

"TopicEntityName": "Jamaica",

"TopicEntityMid": "m.03_r3",

"InferentialChain": [

"location.country.languages_spoken"

],

"Constraints": [],

"Time": null,

"Order": null,

"Answers": [

{

"AnswerType": "Entity",

"AnswerArgument": "m.01428y",

"EntityName": "Jamaican English"

},

{

"AnswerType": "Entity",

"AnswerArgument": "m.04ygk0",

"EntityName": "Jamaican Creole English Language"

}

]

},

{

"ParseId": "WebQTest-0.P1",

"AnnotatorId": 0,

"AnnotatorComment": {

"ParseQuality": "Complete",

"QuestionQuality": "Good",

"Confidence": "Normal",

"FreeFormComment": "First-round parse verification"

},

"Sparql": "PREFIX ns: <http://rdf.freebase.com/ns/>\nSELECT DISTINCT ?x\nWHERE {\nFILTER (?x != ns:m.03_r3)\nFILTER (!isLiteral(?x) OR lang(?x) = '' OR langMatches(lang(?x), 'en'))\nns:m.03_r3 ns:location.country.official_language ?x .\n}\n",

"PotentialTopicEntityMention": "jamaican",

"TopicEntityName": "Jamaica",

"TopicEntityMid": "m.03_r3",

"InferentialChain": [

"location.country.official_language"

],

"Constraints": [],

"Time": null,

"Order": null,

"Answers": [

{

"AnswerType": "Entity",

"AnswerArgument": "m.01428y",

"EntityName": "Jamaican English"

}

]

}

]

},… ]

}

To evaluate the performance of the system, the dataset was reshaped into conversations. The script for converting a dataset to a conversational dataset can be found under:

davtyana@tapoa/local/data/davtyana/aqqu/ConversationalData/create_data_set.pyThe script gathers the questions into groups according to its entities. In these groups, the first question does not change. In all of the following questions, the entities were replaced with a corresponding pronoun. The entity is defined in the dataset under TopicEntityName. For each entity, the script determines its gender and replaces the entity name with either he, she, it or there. To find out, which gender the entity belongs to, the script first looks for the entity name in the gender.csv file. If the name is not found, then the gender is guessed using the gender_guesser package.

The structure of the conversational dataset is:

{

"FreebaseVersion": "2015-08-09",

"Conversations": [

{

"TopicEntityMid": "m.076ltd",

"Questions": [

{

"QuestionId": "WebQTest-612",

"utterance": "who does jeremy shockey play for in 2012",

"Parses": [

{

"AnnotatorId": 3,

"ParseId": "WebQTest-612.P0",

"AnnotatorComment": {

"ParseQuality": "Complete",

"QuestionQuality": "Good",

"Confidence": "VeryLow",

"FreeFormComment": "?? in 2012 filter not added."

},

"Sparql": "PREFIX ns: <http://rdf.freebase.com/ns/>\nSELECT DISTINCT ?x\nWHERE {\nFILTER (?x != ns:m.076ltd)\nFILTER (!isLiteral(?x) OR lang(?x) = '' OR langMatches(lang(?x), 'en'))\nns:m.076ltd ns:base.schemastaging.athlete_extra.salary ?y .\n?y ns:base.schemastaging.athlete_salary.team ?x .\n}\n",

"InferentialChain": [

"base.schemastaging.athlete_extra.salary",

"base.schemastaging.athlete_salary.team"

],

"PotentialTopicEntityMention": "jeremy shockey",

"Answers": [

{

"AnswerArgument": "m.01y3c",

"EntityName": "Carolina Panthers",

"AnswerType": "Entity"

}

],

"TopicEntityName": "Jeremy Shockey",

"Time": null,

"TopicEntityMid": "m.076ltd",

"Order": null,

"Constraints": []

}

],

"results": [

"Carolina Panthers"

],

"targetOrigSparql": "PREFIX ns: <http://rdf.freebase.com/ns/>\nSELECT DISTINCT ?x\nWHERE {\nFILTER (?x != ns:m.076ltd)\nFILTER (!isLiteral(?x) OR lang(?x) = '' OR langMatches(lang(?x), 'en'))\nns:m.076ltd ns:base.schemastaging.athlete_extra.salary ?y .\n?y ns:base.schemastaging.athlete_salary.team ?x .\n}\n",

"RawQuestion": "who does jeremy shockey play for in 2012?",

"ProcessedQuestion": "who does jeremy shockey play for in 2012",

"id": 1

}

]

}, … ]}

Results evaluation

The code from

https://ad-git.informatik.uni-freiburg.de/ad/aqqu-webserverwas taken as a base and adapted for the conversational dataset. All of the questions and answers in the evaluation were taken from the original dataset (WebQSP), used to train Aqqu. The adapted code can be found under:

https://ad-git.informatik.uni-freiburg.de/ad/aqqu-webserver/tree/conversational_no_genderEvaluation metrics

- q1, … ,qn: questions

- c1, … ,ci: the answer candidates

- g1, … ,gn: gold answers

- a1, … ,an: the answers from the system for the first candidate

- GA-Size: Gold answer size is the number of ground truth answers \((size([g1, g2, … ,gn]))\).

- BCA-Size: Best candidate answer size is the number of answers of the first candidate \((size([a1, a2, …,an]))\).

- Candidates: The number of all predicted candidates \((size([c1, c2,…, ci]))\).

- Precision: The precision shows what percentage of the answers from the best candidate are correct.

\[\text{Precison} = \frac{\text{TP}}{\text{TP + FP}},\] where TP is a true positive, i.e. \(TP = size(ak,…,am)\), where \(ak,…,am\) are correct answers and FP is a false positive, i.e. \(FP = size(al,…,ap)\), where \(al,…,ap\) are false answers.

For example:

Only one answer out of two is correct and only one correct answer is found.Utterance GA-size GA BCA-Size BCA who does ronaldinho play for now 2011? 2 "Brazil national football team"

"Clube de Regatas do Flamengo"2 "Clube Atlético Mineiro"

"Clube de Regatas do Flamengo"

Therefore \(TP = 1\), \(FP = 1\), \(Precision = 1/(1+1) = 0.5\). - Recall: The recall measures how well the system finds correct answers, i.e. what percentage of correct answers are found.

\[\text{Recall} = \frac{\text{TP}}{\text{TP + FN}},\]

where FN is false negative, i.e. \(FN = size(gl,…,gp)\), where \(gl,…,gp\) are correct answers that were not found by the system.

For example:

There are two answers found, but in the Gold-Answer list there is only one correct answer.Utterance GA-size GA BCA-Size BCA What state does romney live in? 1 "Massachusetts" 2 "Massachusetts"

"Bloomfield Hills"

\(TP = 1\), \(FN = 0\), \(Recall = 1/(1+0) = 1\) - F1: It is the harmonic average of the precision and recall.

The best value is 1 (Precision = 1 and Recall = 1) and the worst is 0 (Precision → 0 and Recall → 0). \[\text{F1} = 2\frac{\text{Precison}\cdot\text{Recall}}{\text{Precision}+\text{Recall}}\] - Parse Match: This parameters shows if the candidate relation that gives an answer with the best F1 score is matched perfectly to the ground truth (>0.99 matching).

Averaged evaluation metrics

- Questions: Total number of questions in the evaluation dataset.

- Average Precision: The average precision of all questions.

- Average Recall: The average recall of all questions.

- Average F1: The average F1 across all questions.

\[\text{average F1} = \frac{1}{n}\displaystyle\sum_{i=1}^{n} F1(g_i, a_i)\] - Accuracy: The percentage of queries answered with the exact gold answer.

\[\text{accuracy} = \frac{1}{n}\displaystyle\sum_{i=1}^{n} I(g_i = a_i)\] - Parse Accuracy: Average parse match across all questions.

Experiments

Let us look at the evaluation results of a very small dataset (he_data_tiny.json, consists of 14 questions).

| ID | Utterance | GA-Size | BCA-Size | Candidates | Precision | Recall | F1 | Parse Match |

|---|---|---|---|---|---|---|---|---|

| 0 | what time zone is chicago in right now? | 1 | 1 | 3 | 1 | 1 | 1 | TRUE |

| 1 | where to stay there tourist? | 1 | 1 | 21 | 1 | 1 | 1 | TRUE |

| 2 | who does ronaldinho play for now 2011? | 2 | 2 | 21 | 0.5 | 0.5 | 0.5 | FALSE |

| 3 | what is ella fitzgerald parents name? | 2 | 2 | 9 | 1 | 1 | 1 | TRUE |

| 4 | what state does romney live in? | 1 | 2 | 10 | 0.5 | 1 | 0.67 | FALSE |

| 5 | where did his parents come from? | 1 | 1 | 31 | 1 | 1 | 1 | TRUE |

| 6 | what university did he graduated from? | 1 | 6 | 21 | 0 | 0 | 0 | FALSE |

| 7 | where did he graduated college? | 1 | 6 | 19 | 0 | 0 | 0 | FALSE |

| 8 | what colleges did he attend? | 5 | 6 | 1 | 0 | 0 | 0 | FALSE |

| 9 | when did he become governor? | 1 | 1 | 6 | 0 | 0 | 0 | FALSE |

| 10 | where is his family from? | 1 | 1 | 8 | 0.5 | 1 | 0.67 | FALSE |

| 11 | what degrees does he have? | 3 | 6 | 1 | 0 | 0 | 0 | FALSE |

| 12 | who does jeremy shockey play for in 2012? | 1 | 1 | 19 | 1 | 1 | 1 | TRUE |

| 13 | what does bolivia border? | 5 | 5 | 10 | 1 | 1 | 1 | FALSE |

Average results for he_data_tiny dataset.

| Questions | 14 |

| Average Precision | 0.65 |

| Average Recall | 0.82 |

| Average F1 | 0.689 |

| Accuracy | 0.500 |

| Parse Accuracy | 0.357 |

Two experiments were conducted to make a comparison between a system with and without context tracking.

Without context tracking

- data with conversational structure and without pronoun replacement

- without gender identification

- not trained with context tracking

- used

conversations_WebQSP.json

With context tracking

- data with conversational structure and with pronoun replacement

- without gender identification

- not trained with context tracking

- used

he_data.json

| Parameter | Without context tracking | With context tracking |

|---|---|---|

| Questions | 1815 | 1815 |

| Average Precision | 0.67 | 0.54 |

| Average Recall | 0.72 | 0.58 |

| Average F1 | 0.657 | 0.527 |

| Accuracy | 0.478 | 0.378 |

| Parse Accuracy | 0.510 | 0.388 |

Experiments Analysis

In the evaluation results, we can see that the performance of a conversational system is inferior, when compared to a non-conversational one. Possible reasons for less accurate results could be:

- For each query with a pronoun, the system has too many identified entities (all entities from the question and all entities from the first answer candidate). Therefore, in some cases, the system can fail to estimate which candidate is more valid and thus eliminates a correct candidate.

- The system was trained on non-conversational data.

Future work

- Implement gender identification – a system, which will sort and store entities with different genders separately. This may obviate the problem with the big number of non-validly identified entities.

- Train the system with context tracking.

- Implement a data augmentation functionality in the Aqqu Chatbot.

- Evaluate the system with different combinations of questions and conversations.

Useful links

Aqqu website:

http://aqqu.informatik.uni-freiburg.deInformation on how to train, build and run the Aqqu system backend:

https://ad-git.informatik.uni-freiburg.de/ad/AqquAqqu backend with the conversation tracking functionality:

https://ad-git.informatik.uni-freiburg.de/ad296/Aqqu/tree/conversationalThe Aqqu chatbot:

https://ad-git.informatik.uni-freiburg.de/ad296/AqquChatterbot/tree/noGenderThe script for converting a dataset to a conversational dataset:

davtyana@tapoa/local/data/davtyana/aqqu/ConversationalData/create_data_set.pyThe evaluation code:

https://ad-git.informatik.uni-freiburg.de/ad/aqqu-webserver/tree/conversational_no_genderReferences

- Hannah Bast, Elmar Haussmann. More Accurate Question Answering on Freebase. Department of Computer Science, University of Freiburg, Freiburg, Germany